This application provides AlphaFold 3 on Biowulf. In addition to a convenient wrapper for running AlphaFold 3 itself, it includes some tools implemented by us, for example for converting fasta files into the required json input format and for validating json input files.

Please also check our general guidance on alphafold-related applications.

Notes:

- af3: the Biowulf wrapper. Use af3 convert to convert basic fasta files with different types of sequences into af3 input json files.
- lscratch: all examples below allocate local scratch (--gres=lscratch:20).
- Environment variables: ALPHAFOLD3_MOUNTS, ALPHAFOLD3_IMAGE, ALPHAFOLD3_TEST_DATA (the example input used below is $ALPHAFOLD3_TEST_DATA/promo.fa).
- Data directory: /fdb/alphafold3/
- Large-memory version: module load alphafold3/3.0.1_largemem
- af3 convert only recognizes ligands given as SMILES sequences in .fa files. Alternatively, ligands can be added manually using either standard or user-provided CCD codes. Check the detailed input documentation for ways to specify ligands in .json files and modifications of protein, DNA and RNA sequences.

Allocate an interactive session on a CPU node for generating MSAs.

Sample session using the Biowulf af3 wrapper:

[user@biowulf]$ sinteractive --mem=80g --cpus-per-task=12 --gres=lscratch:20
salloc.exe: Pending job allocation 46116226
salloc.exe: job 46116226 queued and waiting for resources
salloc.exe: job 46116226 has been allocated resources
salloc.exe: Granted job allocation 46116226
salloc.exe: Waiting for resource configuration
salloc.exe: Nodes cn3144 are ready for job

[user@cn3144 ~]$ module load alphafold3
[user@cn3144 ~]$ af3 --help
Usage: af3 {convert,validate,run} [-h] [--completion COMPLETION]

Subcommands
  convert    Convert a set of fasta files to alphafold3 json input files.
  validate   Validates alphafold3 json format input files.
  run        Wrapper for running alphafold3 on Biowulf.

Help
  [-h, --help]                Show this message and exit.
  [--completion COMPLETION]   Use --completion generate to print shell-specific completion source. Valid options: generate, complete.

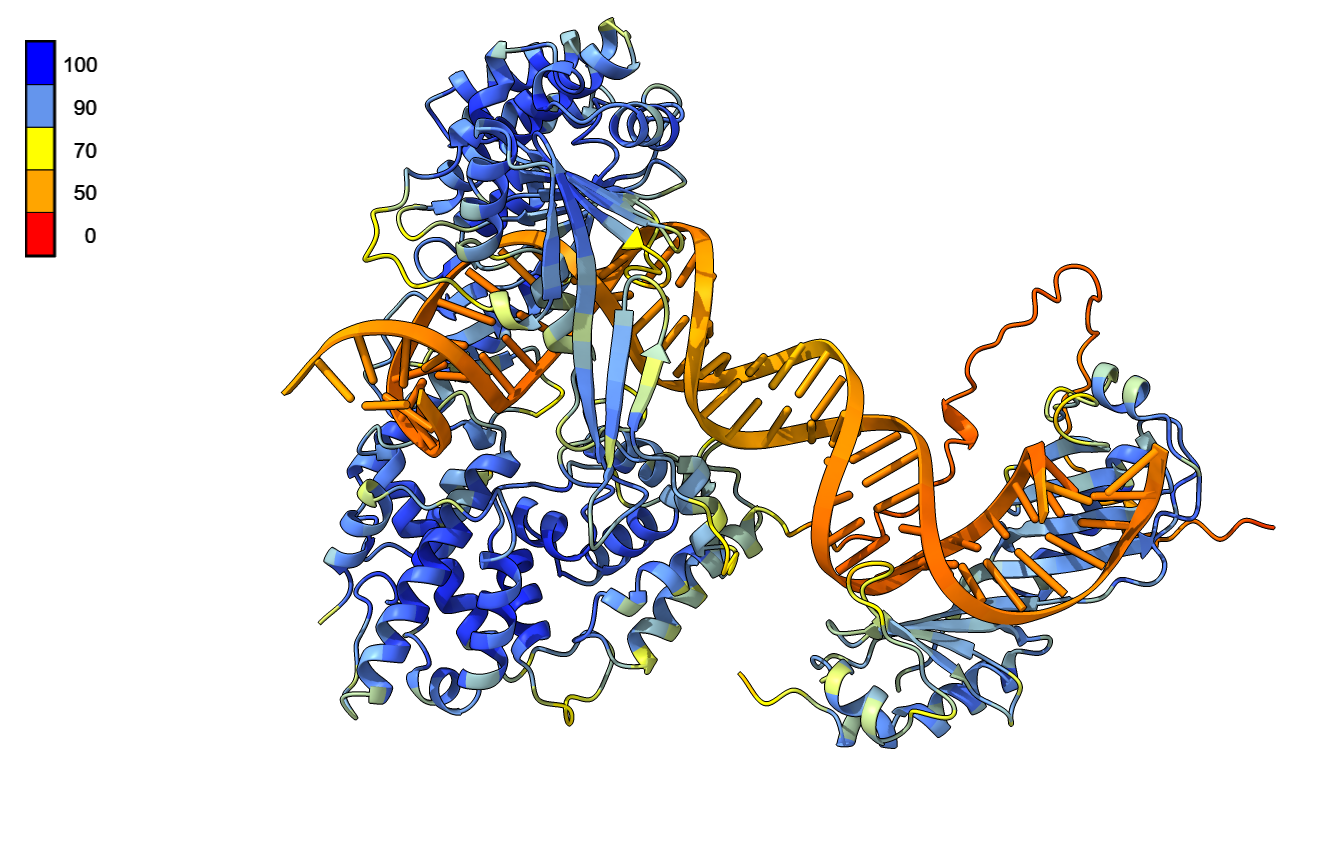

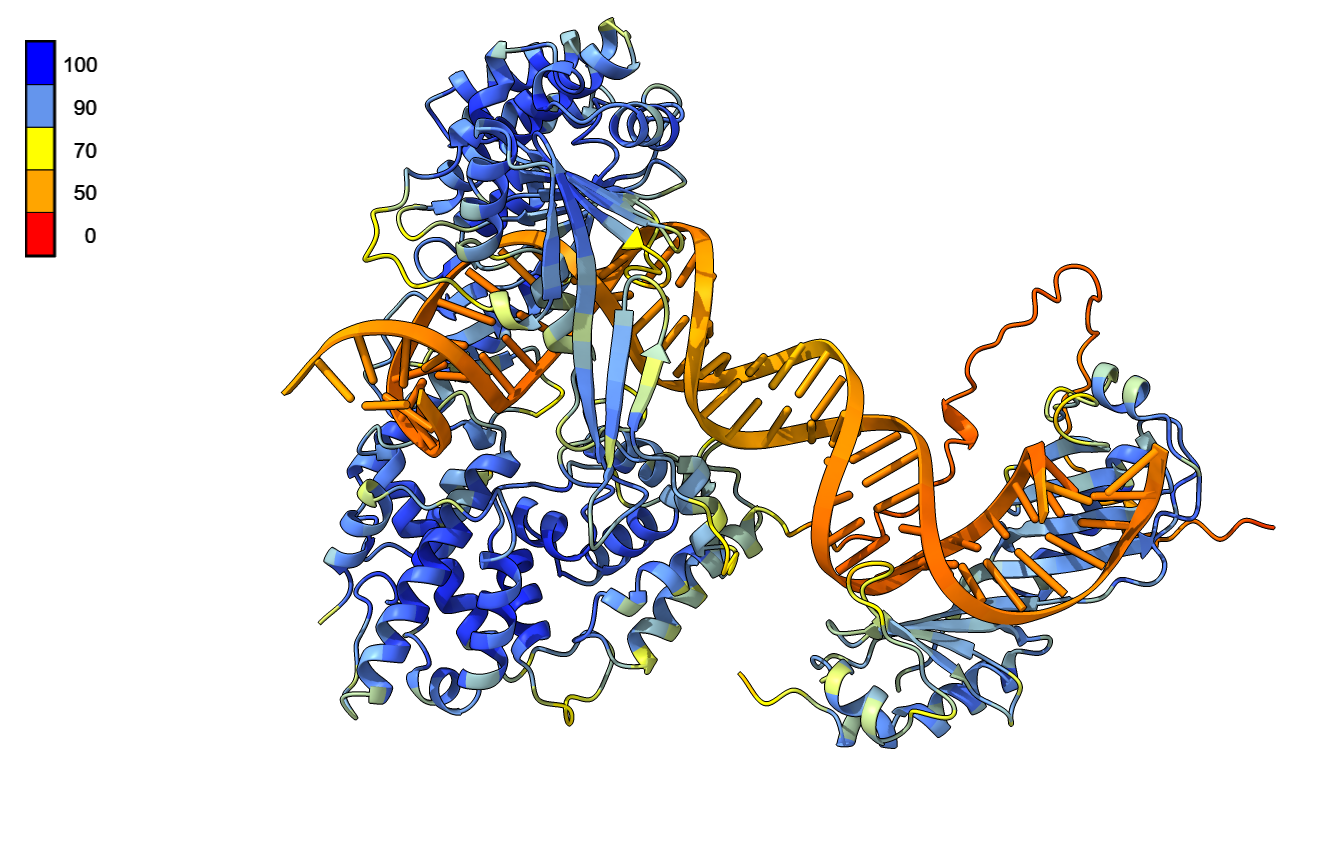

Import a fasta file. In this example the fasta file contains 3 protein subunits and 2 DNA sequences that form a double-stranded fragment. By default, af3 convert assumes that all sequences are protein sequences, so we use --guess to instruct it to guess the sequence types. Specifying multiple seeds runs model inference once per seed, and each run produces several samples (see the seed-N_sample-M directories in the inference output). In the subsequent af3 run step we use --norun_inference to run only the data processing pipeline.
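The --seeds argument takes a comma-separated list. The following is a small sketch for building that list for an arbitrary number of seeds. It assumes a POSIX shell with seq and paste. n_seeds is a hypothetical variable name, and the af3 call is the same command used in the session below, run only if the module is loaded:

```shell
#!/usr/bin/env bash
# Build the comma-separated list expected by `af3 convert --seeds`.
n_seeds=5                                   # hypothetical: how many seeds to run
seeds=$(seq 1 "$n_seeds" | paste -sd, -)    # joins 1..n with commas
echo "seeds: $seeds"

# Run the conversion only when af3 is on PATH (module load alphafold3).
if command -v af3 >/dev/null 2>&1; then
    af3 convert --guess --output-dir af3_input --seeds "$seeds" \
        "$ALPHAFOLD3_TEST_DATA/promo.fa"
fi
```

Each additional seed adds one model inference run. In the GPU session further below, each seed took roughly 100 to 130 seconds on an A100.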

[user@cn3144 ~]$ af3 convert --guess --output-dir af3_input --seeds 1,2,3,4,5 $ALPHAFOLD3_TEST_DATA/promo.fa
[23:59:25] INFO     processing promo.fa                            cli_convert.py:86
           INFO     - P20226: A[protein]                           cli_convert.py:101
           INFO     - YP_233025.1: B[protein]                      cli_convert.py:101
           INFO     - YP_233009.1: C[protein]                      cli_convert.py:101
           INFO     - i1l_intermediate_promoter: D[dna]            cli_convert.py:101
           INFO     - i1l_intermediate_promoter_rc: E[dna]         cli_convert.py:101
[user@cn3144 ~]$ af3 validate af3_input/promo.json
[12:05:38] INFO     OK: af3_input/promo.json

[user@cn3144 ~]$ cat af3_input/promo.json
{
  "name": "promo",
  "modelSeeds": [
    1,
    2,
    3,
    4,
    5
  ],
  "dialect": "alphafold3",
  "version": 1,
  "sequences": [
    {
      "protein": {
        "id": "A",
        "sequence": "MDQNNSLPPYAQGLASPQGAMTPGIPIFSPMMPYGTGLTPQPIQNTNSLSILEEQQRQQQQQQQQQQQQQQQQQQQQQQQQQQQQQQQQQQQQQQQAVAAAAVQQSTSQQATQGTSGQAPQLFHSQTLTTAPLPGTTPLYPSPMTPMTPITPATPASESSGIVPQLQNIVSTVNLGCKLDLKTIALRARNAEYNPKRFAAVIMRIREPRTTALIFSSGKMVCTGAKSEEQSRLAARKYARVVQKLGFPAKFLDFKIQNMVGSCDVKFPIRLEGLVLTHQQFSSYEPELFPGLIYRMIKPRIVLLIFVSGKVVLTGAKVRAEIYEAFENIYPILKGFRKTT"
      }
    },
    {
      "protein": {
        "id": "B",
        "sequence": "MDNLFTFLHEIEDRYARTIFNFHLISCDEIGDIYGLMKERISSEDMFDNIVYNKDIHPAIKKLVYCDIQLTKHIINQNTYPVFNDSSQVKCCHYFDINSDNSNISSRTVEIFEREKSSLVSYIKTTNKKRKVNYGEIKKTVHGGTNANYFSGKKSDEYLSTTVRSNINQPWIKTISKRMRVDIINHSIVTRGKSSILQTIEIIFTNRTCVKIFKDSTMHIILSKDKDEKGCIHMIDKLFYVYYNLFLLFEDIIQNEYFKEVANVVNHVLTATALDEKLFLIKKMAEHDVYGVSNFKIGMFNLTFIKSLDHTVFPSLLDEDSKIKFFKGKKLNIVALRSLEDCINYVTKSENMIEMMKERSTILNSIDIETESVDRLKELLLK"
      }
    },
    {
      "protein": {
        "id": "C",
        "sequence": "MFEPVPDLNLEASVELGEVNIDQTTPMIKENSGFISRSRRLFAHRSKDDERKLALRFFLQRLYFLDHREIHYLFRCVDAVKDVTITKKNNIIVAPYIALLTIASKGCKLTETMIEAFFPELYNEHSKKFKFNSQVSIIQEKLGYQFGNYHVYDFEPYYSTVALAIRDEHSSGIFNIRQESYLVSSLSEITYRFYLINLKSDLVQWSASTGAVINQMVNTVLITVYEKLQLVIENDSQFTCSLAVESKLPIKLLKDRNELFTKFINELKKTSSFKISKRDKDTLLKYFT"
      }
    },
    {
      "dna": {
        "id": "D",
        "sequence": "TTGTATTTAAAAGTTGTTTGGTGAACTTAAATGG"
      }
    },
    {
      "dna": {
        "id": "E",
        "sequence": "CCATTTAAGTTCACCAAACAACTTTTAAATACAA"
      }
    }
  ]
}

[user@cn3144 ~]$ model=/path/to/model/dir
[user@cn3144 ~]$ ls $model
af3.bin.zst
[user@cn3144 ~]$ af3 run --model_dir $model --output_dir=af3_model \
    --json_path af3_input/promo.json --norun_inference
Running AlphaFold 3. Please note that standard AlphaFold 3 model parameters are
only available under terms of use provided at
https://github.com/google-deepmind/alphafold3/blob/main/WEIGHTS_TERMS_OF_USE.md.
If you do not agree to these terms and are using AlphaFold 3 derived model
parameters, cancel execution of AlphaFold 3 inference with CTRL-C, and do not
use the model parameters.
Skipping running model inference.
Processing 1 fold inputs.
Processing fold input promo
Running data pipeline...
Processing chain A
[...snip...]
Processing chain A took 1931.94 seconds
Processing chain B
[...snip...]
Processing chain B took 1344.49 seconds
Processing chain C
[...snip...]
Processing chain C took 1353.66 seconds
Processing chain D
Processing chain D took 0.00 seconds
Processing chain E
Processing chain E took 0.00 seconds

[user@cn3144 ~]$ tree af3_model
af3_model/
└── [user 4.0K] promo
    └── [user  12M] promo_data.json

1 directory, 1 file
[user@cn3144 ~]$ exit
salloc.exe: Relinquishing job allocation 46116226
[user@biowulf ~]$
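af3 convert only recognizes ligands given as SMILES, so ligands specified by CCD code have to be written into the input JSON directly. The following is a minimal sketch that writes such a file with a here-document. It reuses the top-level keys of the promo.json shown above. The "ligand"/"ccdCodes" entry follows the upstream AlphaFold 3 input format. The job name, the short protein sequence and the CCD code (ATP) are hypothetical placeholders:

```shell
#!/usr/bin/env bash
# Write a minimal AlphaFold 3 input JSON by hand (hypothetical example).
# Top-level keys mirror promo.json; the ligand uses a standard CCD code.
mkdir -p af3_input
cat > af3_input/my_complex.json <<'EOF'
{
  "name": "my_complex",
  "modelSeeds": [1, 2],
  "dialect": "alphafold3",
  "version": 1,
  "sequences": [
    {
      "protein": {
        "id": "A",
        "sequence": "MKTAYIAKQRQISFVKSHFSRQ"
      }
    },
    {
      "ligand": {
        "id": "B",
        "ccdCodes": ["ATP"]
      }
    }
  ]
}
EOF

# Check the file with the wrapper's validator when the module is loaded.
if command -v af3 >/dev/null 2>&1; then
    af3 validate af3_input/my_complex.json
fi
```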

For this input, generating the MSAs took approximately 1.3 h on an x9454 node. We are working on a tool for batch processing of alignments against reference data in lscratch. The data processing pipeline creates a new json file (af3_model/promo/promo_data.json above) that includes the generated multiple sequence alignments. Such input files can also be generated by any other data processing pipeline. See the alphafold3 documentation for more detail.
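The 1.3 h figure is the sum of the per-chain timings printed by the data pipeline. The following is a small awk sketch that computes this sum from a saved log. It assumes the log lines look exactly like the "Processing chain ... took ... seconds" lines in the session above. msa.log and msa_hours are hypothetical names:

```shell
#!/usr/bin/env bash
# Sum the per-chain data pipeline timings in a saved `af3 run` log.
msa_hours() {
    # the number of seconds is the second-to-last field on each timing line
    awk '/^Processing chain .* took .* seconds/ { total += $(NF-1) }
         END { printf "%.2f s (%.2f h)\n", total, total / 3600 }' "$1"
}

# Example log made of the timing lines from the session above.
cat > msa.log <<'EOF'
Processing chain A took 1931.94 seconds
Processing chain B took 1344.49 seconds
Processing chain C took 1353.66 seconds
Processing chain D took 0.00 seconds
Processing chain E took 0.00 seconds
EOF
msa_hours msa.log
```

For these timings the function prints 4630.09 s (1.29 h).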

Next, we use an A100 GPU for model inference. A100 or newer GPUs are required for triton flash attention. On older GPUs, xla attention has to be used. For inference we use --norun_data_pipeline because the MSAs were already generated in the previous step.
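The A100 requirement corresponds to NVIDIA compute capability 8.0. The following sketch picks the attention implementation from the compute capability reported by nvidia-smi. The option name --flash_attention_implementation (values triton, cudnn, xla) comes from the upstream AlphaFold 3 run_alphafold.py. Whether af3 run forwards it unchanged is an assumption; check with af3 run --help. attn_impl is a hypothetical helper name:

```shell
#!/usr/bin/env bash
# Map a compute capability such as "8.0" to an attention implementation:
# triton needs compute capability 8.0 (A100) or newer, otherwise use xla.
attn_impl() {
    local major=${1%%.*}                     # "8.0" -> "8"
    if [ "$major" -ge 8 ] 2>/dev/null; then
        echo triton
    else
        echo xla                             # also the fallback for bad input
    fi
}

# Query the first GPU; the compute_cap field requires a recent NVIDIA driver.
if command -v nvidia-smi >/dev/null 2>&1; then
    cap=$(nvidia-smi --query-gpu=compute_cap --format=csv,noheader 2>/dev/null | head -n 1)
    impl=$(attn_impl "$cap")
else
    impl=xla
fi
echo "suggested: --flash_attention_implementation=$impl"
```

On an A100 node this selects triton, which is also the upstream default.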

[user@biowulf]$ sinteractive --mem=20g --cpus-per-task=12 --gres=lscratch:20,gpu:a100:1
salloc.exe: Pending job allocation 46116227
salloc.exe: job 46116227 queued and waiting for resources
salloc.exe: job 46116227 has been allocated resources
salloc.exe: Granted job allocation 46116227
salloc.exe: Waiting for resource configuration
salloc.exe: Nodes cn0073 are ready for job

[user@cn0073 ~]$ module load alphafold3
[user@cn0073 ~]$ model=/path/to/model/dir
[user@cn0073 ~]$ af3 run --norun_data_pipeline --json_path af3_model/promo/promo_data.json \
    --model_dir $model --output_dir=./af3_model
[...snip...]
Featurising data for seeds (1, 2, 3, 4, 5)...
[...snip...]
Featurising data for seeds (1, 2, 3, 4, 5) took 73.36 seconds.
Running model inference for seed 1...
Running model inference and extracting output structures for seed 1 took 129.86 seconds.
Running model inference for seed 2...
Running model inference and extracting output structures for seed 2 took 100.20 seconds.
Running model inference for seed 3...
Running model inference and extracting output structures for seed 3 took 100.03 seconds.
Running model inference for seed 4...
Running model inference and extracting output structures for seed 4 took 100.11 seconds.
Running model inference for seed 5...
Running model inference and extracting output structures for seed 5 took 100.32 seconds.
Running model inference and extracting output structures for seeds (1, 2, 3, 4, 5) took 530.52 seconds.
Writing outputs for promo for seed(s) (1, 2, 3, 4, 5)...
Done processing fold input promo.
Done processing 1 fold inputs.

[user@cn0073 ~]$ tree af3_model
af3_model/
└── [user 4.0K] promo
    ├── [user  13K] TERMS_OF_USE.md
    ├── [user  624] ranking_scores.csv
    ├── [user 4.0K] seed-1_sample-0
    │   ├── [user 9.1M] confidences.json
    │   ├── [user 725K] model.cif
    │   └── [user  621] summary_confidences.json
[...snip...]
    ├── [user 4.0K] seed-5_sample-4
    │   ├── [user 9.1M] confidences.json
    │   ├── [user 725K] model.cif
    │   └── [user  616] summary_confidences.json
    ├── [user 9.1M] promo_confidences.json
    ├── [user  12M] promo_data.json
    ├── [user 733K] promo_model.cif
    └── [user  618] promo_summary_confidences.json

[user@cn0073 ~]$ cat af3_model/promo/ranking_scores.csv
seed,sample,ranking_score
1,0,0.6383556805710533
1,1,0.6397741633924838
1,2,0.6374833859487241
1,3,0.6302300867657832
1,4,0.6246919848132091
2,0,0.6395896419775849
2,1,0.6211071934342338
2,2,0.6314571421329415
2,3,0.6559208263291473
2,4,0.6197578295821197
3,0,0.6493369799732224
3,1,0.6524003286557849
3,2,0.6585710305194263
3,3,0.6502327801866927
3,4,0.664610744023165
4,0,0.619652988060626
4,1,0.6237174764953402
4,2,0.6348252568772048
4,3,0.6297265192006525
4,4,0.6253496734254855
5,0,0.6508382745016786
5,1,0.6438060137920402
5,2,0.6284501912141062
5,3,0.6349033553455605
5,4,0.6352727254107549
[user@cn0073 ~]$ exit
salloc.exe: Relinquishing job allocation 46116227
[user@biowulf ~]$
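To find the best prediction, sort ranking_scores.csv by its ranking_score column. The following is a minimal sketch using sort. best_sample is a hypothetical helper name. AlphaFold 3 also writes its top-ranked sample to the top level of the output directory (promo_model.cif in the listing above), so this sketch is mainly useful for comparing seeds and samples:

```shell
#!/usr/bin/env bash
# Print the seed,sample,ranking_score row with the highest ranking_score.
best_sample() {
    # skip the header, sort column 3 numerically in descending order
    # (C locale so "." is the decimal point), keep the first row
    tail -n +2 "$1" | LC_ALL=C sort -t, -k3,3nr | head -n 1
}

csv=af3_model/promo/ranking_scores.csv
if [ -f "$csv" ]; then
    echo "best (seed,sample,score): $(best_sample "$csv")"
fi
```

For the ranking_scores.csv above, this returns 3,4,0.664610744023165, which corresponds to af3_model/promo/seed-3_sample-4.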

Similarly, batch jobs should be run in two steps: multiple sequence alignment (MSA) generation on CPU and model inference on GPU.

1. Create a batch script for the MSA step (e.g. af3_MSA.sh). For example:

#!/bin/bash
###This is af3_MSA.sh
module load alphafold3/3.0.0

###Convert the example .fa file to .json; you could test with your own .fa/.txt/.seq file
af3 convert --guess --output-dir af3_input --seeds 1,2,3,4,5 $ALPHAFOLD3_TEST_DATA/promo.fa

###The model file af3.bin.zst must be obtained from Google and transferred to your directory on Biowulf
model=/path/to/your/model_dir
af3 run \
    --model_dir=$model \
    --output_dir=af3_input \
    --json_path=af3_input/promo.json \
    --norun_inference \
    -- --jackhmmer_n_cpu=6

Submit this job using the Slurm sbatch command.

[user@biowulf]$ jid=$(sbatch --parsable --cpus-per-task=12 --mem=80g --time=2:00:00 --gres=lscratch:20 af3_MSA.sh)
[user@biowulf]$ echo $jid

2. Set up a dependent job to run model inference. Create the following script:

#!/bin/bash
###This is af3_inference.sh
module load alphafold3/3.0.0
model=/path/to/your/model_dir
af3 run \
    --model_dir=$model \
    --output_dir=predicted_models \
    --json_path=af3_input/promo/promo_data.json \
    --norun_data_pipeline

Submit this job using the sbatch command:

[user@biowulf]$ sbatch --cpus-per-task=12 --mem=20g --time=2:00:00 --partition=gpu --gres=lscratch:20,gpu:a100:1 --dependency=afterok:${jid} af3_inference.sh

# --dependency can be omitted if the MSA job from step 1 has already finished successfully. Check details with: sbatch --help
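If the MSA output may already exist (for example, when only step 2 is re-run), the dependency can be added conditionally. The following sketch assumes the file names used in the two scripts above and Slurm's afterok dependency type. dependency_opt is a hypothetical helper name, and $jid is the job ID captured in step 1:

```shell
#!/usr/bin/env bash
# Print a Slurm dependency option for the inference job, but only if the
# data pipeline output is missing and an MSA job ID is known.
dependency_opt() {
    local msa_jobid=$1 data_json=$2
    if [ ! -f "$data_json" ] && [ -n "$msa_jobid" ]; then
        echo "--dependency=afterok:${msa_jobid}"
    fi
}

data_json=af3_input/promo/promo_data.json   # written by af3_MSA.sh
dep=$(dependency_opt "${jid:-}" "$data_json")
echo "extra sbatch option: ${dep:-none}"

# $dep is left unquoted on purpose: when it is empty, no argument is passed.
if command -v sbatch >/dev/null 2>&1; then
    sbatch --cpus-per-task=12 --mem=20g --time=2:00:00 --partition=gpu \
        --gres=lscratch:20,gpu:a100:1 $dep af3_inference.sh
fi
```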