
NIDDK has funded a number of nodes in the Biowulf cluster, and the Lab of Chemical Physics (LCP) had priority access to these nodes until January 7, 2016. There are a total of 206 nodes (3296 cores). As of January 8, 2016, these nodes have been transitioned to the general pool and now form the ibqdr partition. The hardware characteristics of each compute node are as follows:


Hyperthreading is a hardware feature of the Xeon processor that allows each physical core to run two simultaneous threads of execution, so a node appears to have twice as many cores as it physically has. Thus the 16-core LCP nodes appear to have 32 cores. In many cases this increases the performance of applications that can multithread or otherwise take advantage of multiple cores. However, before running 32 threads of execution on a single node, the Biowulf staff recommends that you benchmark your application to determine whether it benefits from hyperthreading (or even whether it scales to 16 cores).
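One way to run the benchmark recommended above is to time the same job at increasing thread counts. The sketch below assumes your program reads the standard OpenMP variable OMP_NUM_THREADS (many threaded codes do, but check your application's documentation); the function name scan_threads is illustrative, and timing uses whole seconds from `date +%s`.

```sh
#!/bin/sh
# Sketch: run one command at increasing thread counts and report wall time,
# to check whether it scales to 16 cores and whether 32 hyperthreads help.
# Assumes the program honors OMP_NUM_THREADS (an assumption; some programs
# take a thread-count flag instead). Resolution is 1 second.
scan_threads() {
    for n in 1 2 4 8 16 32; do
        start=$(date +%s)
        # Discard the program's own output; only the timing is reported
        OMP_NUM_THREADS=$n "$@" >/dev/null 2>&1
        end=$(date +%s)
        printf '%2d threads: %s s\n' "$n" "$((end - start))"
    done
}
```

For example, `scan_threads ./my_app input.dat` (my_app being a placeholder) prints one line per thread count. If the 32-thread time is no better than the 16-thread time, the application gains nothing from hyperthreading. Run it where your allocation includes all the CPUs being tested; otherwise the higher thread counts compete for fewer CPUs.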

All LCP nodes are connected to a QDR Mellanox InfiniBand switch (SX6512) with full bisection bandwidth. No special properties need to be specified to sbatch to allocate an InfiniBand node.

Jobs are submitted to the LCP nodes by specifying the "lcp" partition. In the simplest case,

sbatch --partition=lcp your_batch_script

See the Slurm documentation or application-specific documentation for suggestions on how to submit multi-node jobs.

To specify a memory size,

sbatch --constraint=g64 --partition=lcp your_batch_script

or

sbatch --constraint=g32 --partition=lcp your_batch_script
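The same options can also be placed in the batch script itself as #SBATCH directives, which sbatch reads from the top of the script. The sketch below writes such a script for a two-node job on the g64 nodes; the script name lcp_job.sh, the program ./my_mpi_app, the task counts and the two-day walltime are placeholders, and whether to launch with srun or mpirun depends on how your MPI program was built.

```sh
#!/bin/sh
# Sketch: write a batch script whose #SBATCH lines carry the sbatch options,
# so that "sbatch lcp_job.sh" needs no extra flags.
# Node/task counts, walltime and ./my_mpi_app are hypothetical placeholders.
cat > lcp_job.sh <<'EOF'
#!/bin/bash
#SBATCH --partition=lcp
#SBATCH --constraint=g64
#SBATCH --nodes=2
#SBATCH --ntasks-per-node=16
#SBATCH --time=2-00:00:00

# 2 nodes x 16 tasks = 32 MPI ranks, one per physical core
srun ./my_mpi_app
EOF
```

Submit it with `sbatch lcp_job.sh`. Options given on the sbatch command line take precedence over the #SBATCH lines, so `sbatch --constraint=g32 lcp_job.sh` would run the same script on the g32 nodes.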

To allocate an interactive node (note: for interactive sessions, specify lcp as a constraint rather than a partition):

sinteractive --constraint=lcp

To submit to swarm,

swarm -f command_file --partition=lcp
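The command file lists one independent command per line. A minimal sketch that builds one from a set of input files, assuming a hypothetical program myprog and a .inp/.out naming scheme (the function name make_swarm_file is also illustrative):

```sh
#!/bin/sh
# Sketch: build a swarm command file with one independent command per line.
# "myprog" and the .inp/.out naming are hypothetical placeholders.
make_swarm_file() {
    for f in "$@"; do
        # e.g. "run1.inp" -> "myprog run1.inp > run1.out"
        printf 'myprog %s > %s.out\n' "$f" "${f%.inp}"
    done > command_file
}
```

For example, `make_swarm_file *.inp` followed by `swarm -f command_file --partition=lcp`.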

There are effectively no per-user core limits at this time; however, there are walltime limits:

```
$ batchlim
Max Jobs Per User: 10000
Max Array Size: 1001

Partition   MaxCPUsPerUser   DefWalltime   MaxWalltime
---------------------------------------------------------------
lcp         9999             10-00:00:00   10-00:00:00
```

While LCP users have priority access to the LCP nodes, the nodes will also be accessible to other Biowulf users through a "short" queue (not yet implemented). Nodes not in use by LCP users may be allocated to short-queue jobs for up to 4 hours, so no LCP job will wait more than 4 hours for nodes held by short-queue jobs.

To see how many nodes of each type are available, use the freen command; a separate section reports the number of available LCP nodes:

```
$ freen
                                         ........Per-Node Resources........
Partition   FreeNds    FreeCPUs    Cores  CPUs  Mem   Disk   Features
--------------------------------------------------------------------------------
lcp         100/103    3200/3296   16     32    31g   400g   cpu32,core16,g32,sata400,x2600,ibddr,lcp
lcp         92/103     2944/3296   16     32    62g   400g   cpu32,core16,g64,sata400,x2600,ibddr,lcp
```
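To pull out just the LCP lines, freen's output can be filtered with awk. This sketch assumes the column order shown above (FreeNds is the second column and Mem the sixth); if freen's layout differs, the field numbers need adjusting. The function name lcp_free is illustrative.

```sh
#!/bin/sh
# Sketch: summarize free LCP nodes from freen output, one line per memory size.
# Assumes columns: Partition FreeNds FreeCPUs Cores CPUs Mem Disk Features.
lcp_free() {
    awk '$1 == "lcp" { print $6 " nodes: " $2 " free" }'
}
```

Running `freen | lcp_free` against the output above would print `31g nodes: 100/103 free` and `62g nodes: 92/103 free`.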

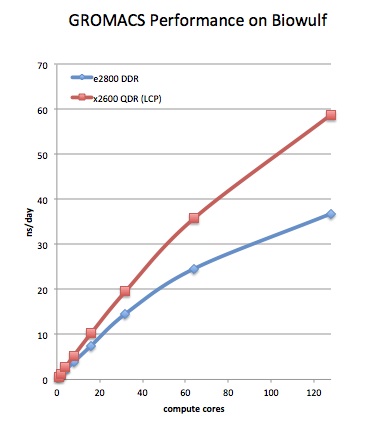

The figure below shows performance on a GROMACS benchmark. The LCP nodes (x2600 QDR) have nearly twice the performance as the previous generation of Infiniband nodes running on Biowulf (e2800 DDR). DDR benchmark was run with 8 processes per node; QDR with 16 processes per node.

Please send questions and comments to staff@hpc.nih.gov