| # nodes | processor cores per node | memory | network | SLURM features |
|---|---|---|---|---|
| **Compute nodes** | | | | |
| 64 | 96 x 2.75 GHz (AMD Epyc 9454); hyperthreading enabled; 256 MB level 3 cache | 768 GB | 200 Gb/s HDR200 InfiniBand (2.25:1) | e9454,core96,cpu192,g768,ssd3200,ibhdr200 |
| 72 | 64 x 2.8 GHz (AMD Epyc 7543); hyperthreading enabled; 256 MB level 3 cache | 512 GB | 200 Gb/s HDR200 InfiniBand (2.25:1) | e7543,core64,cpu128,g512,ssd3200,ibhdr200 |
| 243 | 36 x 2.3 GHz (Intel Gold 6140); hyperthreading enabled; 25 MB level 3 cache | 384 GB | 100 Gb/s HDR100 InfiniBand (2.25:1) | x6140,core36,cpu72,g384,ssd3200,ibhdr100 |
| 1152 | 28 x 2.4 GHz (Intel E5-2680v4); hyperthreading enabled; 35 MB level 3 cache | 256 GB | 56 Gb/s FDR InfiniBand (1.11:1) | x2680,core28,cpu56,g256,ssd800,ibfdr |
| 1080 | 28 x 2.3 GHz (Intel E5-2695v3); hyperthreading enabled; 35 MB level 3 cache | 256 GB | 56 Gb/s FDR InfiniBand (1.11:1) | x2695,core28,cpu56,g256,ssd400,ibfdr |
| **GPU nodes** | | | | |
| 76 | 32 x 2.8 GHz (AMD Epyc 7543p); hyperthreading enabled; 256 MB level 3 cache; 4 x NVIDIA A100 GPUs (80 GB VRAM, 6912 CUDA cores, 432 Tensor cores); NVLink | 256 GB | 200 Gb/s HDR InfiniBand (1:1) | e7543p,core32,cpu64,g256,gpua100,ssd3200,ibhdr200 |
| 56 | 36 x 2.3 GHz (Intel Gold 6140); hyperthreading enabled; 25 MB level 3 cache; 4 x NVIDIA V100-SXM2 GPUs (32 GB VRAM, 5120 CUDA cores, 640 Tensor cores); NVLink | 384 GB | 200 Gb/s HDR InfiniBand (1:1) | x6140,core36,cpu72,g384,gpuv100x,ssd1600,ibhdr |
| 8 | 28 x 2.4 GHz (Intel E5-2680v4); hyperthreading enabled; 35 MB level 3 cache; 4 x NVIDIA V100 GPUs (16 GB VRAM, 5120 CUDA cores, 640 Tensor cores) | 128 GB | 56 Gb/s FDR InfiniBand (1.11:1) | x2680,core28,cpu56,g256,gpuv100,ssd800,ibfdr |
| 48 | 28 x 2.4 GHz (Intel E5-2680v4); hyperthreading enabled; 35 MB level 3 cache; 4 x NVIDIA P100 GPUs (16 GB VRAM, 3584 CUDA cores) | 128 GB | 56 Gb/s FDR InfiniBand (1.11:1) | x2680,core28,cpu56,g256,gpup100,ssd650,ibfdr |
| 72 | 28 x 2.4 GHz (Intel E5-2680v4); hyperthreading enabled; 35 MB level 3 cache; 2 x NVIDIA K80 cards, each with 2 x GK210 GPUs (24 GB VRAM and 4992 CUDA cores per card) | 256 GB | 56 Gb/s FDR InfiniBand (1.11:1) | x2680,core28,cpu56,g256,gpuk80,ssd800,ibfdr |
| **Large-memory nodes** | | | | |
| 16 | 96 x 2.75 GHz (AMD Epyc 9454); hyperthreading enabled; 256 MB level 3 cache | 3 TB | 200 Gb/s HDR InfiniBand | e9454,core96,cpu192,g3072,ssd3200,ibhdr200 |
| 4 | 72 x 2.2 GHz (Intel E7-8860v4); hyperthreading enabled; 45 MB level 3 cache | 3 TB | 56 Gb/s FDR InfiniBand (1.11:1) | x8860,core72,cpu144,g3072,ssd800,ibfdr |
| 20 | 72 x 2.2 GHz (Intel E7-8860v4); hyperthreading enabled; 45 MB level 3 cache | 1.5 TB | 56 Gb/s FDR InfiniBand (1.11:1) | x8860,core72,cpu144,g1536,ssd800,ibfdr |
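The SLURM features in the last column are the names a job can request through SLURM's `--constraint` option, where `|` means "any of these features". As a rough illustration of reading the table, the sketch below transcribes a few of its rows and builds such an OR-ed constraint string from a minimum core count and memory size. It also checks that, with hyperthreading enabled, each `cpuN` feature is twice the `coreN` feature. The `NodeType` class and the `constraint_for` and `hyperthreads_consistent` helpers are hypothetical illustrations, not part of any Biowulf tool.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class NodeType:
    """One row of the node table (a hand-transcribed subset, for illustration only)."""
    count: int
    cores: int                      # physical cores per node
    memory_gb: int
    features: tuple[str, ...]       # SLURM features as listed in the table
    gpu_feature: Optional[str] = None


NODE_TYPES = [
    NodeType(72, 64, 512, ("e7543", "core64", "cpu128", "g512", "ssd3200", "ibhdr200")),
    NodeType(243, 36, 384, ("x6140", "core36", "cpu72", "g384", "ssd3200", "ibhdr100")),
    NodeType(1152, 28, 256, ("x2680", "core28", "cpu56", "g256", "ssd800", "ibfdr")),
    NodeType(1080, 28, 256, ("x2695", "core28", "cpu56", "g256", "ssd400", "ibfdr")),
    NodeType(76, 32, 256, ("e7543p", "core32", "cpu64", "g256", "gpua100", "ssd3200", "ibhdr200"), "gpua100"),
    NodeType(56, 36, 384, ("x6140", "core36", "cpu72", "g384", "gpuv100x", "ssd1600", "ibhdr"), "gpuv100x"),
]


def hyperthreads_consistent(node: NodeType) -> bool:
    """With hyperthreading, the cpuN feature should be 2 x the coreN feature."""
    return f"core{node.cores}" in node.features and f"cpu{2 * node.cores}" in node.features


def constraint_for(min_cores: int, min_memory_gb: int, gpu_feature: Optional[str] = None) -> str:
    """Return an OR-ed constraint string ('' if nothing matches)."""
    chosen: list[str] = []
    for node in NODE_TYPES:
        if node.gpu_feature != gpu_feature:
            continue  # CPU-only requests skip GPU nodes, and GPU requests skip other GPU types
        if node.cores >= min_cores and node.memory_gb >= min_memory_gb:
            # Name GPU nodes by their GPU feature, CPU nodes by their processor feature.
            key = node.gpu_feature or node.features[0]
            if key not in chosen:
                chosen.append(key)
    return "|".join(chosen)


assert all(hyperthreads_consistent(n) for n in NODE_TYPES)
print(constraint_for(min_cores=28, min_memory_gb=256))  # e7543|x6140|x2680|x2695
print(constraint_for(min_cores=36, min_memory_gb=384))  # e7543|x6140
print(constraint_for(min_cores=1, min_memory_gb=1, gpu_feature="gpua100"))  # gpua100
```

A string such as `e7543|x6140` could then be passed as `sbatch --constraint="e7543|x6140" job.sh`. Matching on the processor feature, rather than on `gNNN`, keeps the constraint tied to one node type per entry.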

An additional 51 service nodes provide system services, such as the batch system controller and the Biowulf login node. Twenty of these serve as Data Transfer Nodes (DTNs) running Globus, parallel GridFTP, and an HTTP/HTTPS/FTP proxy.

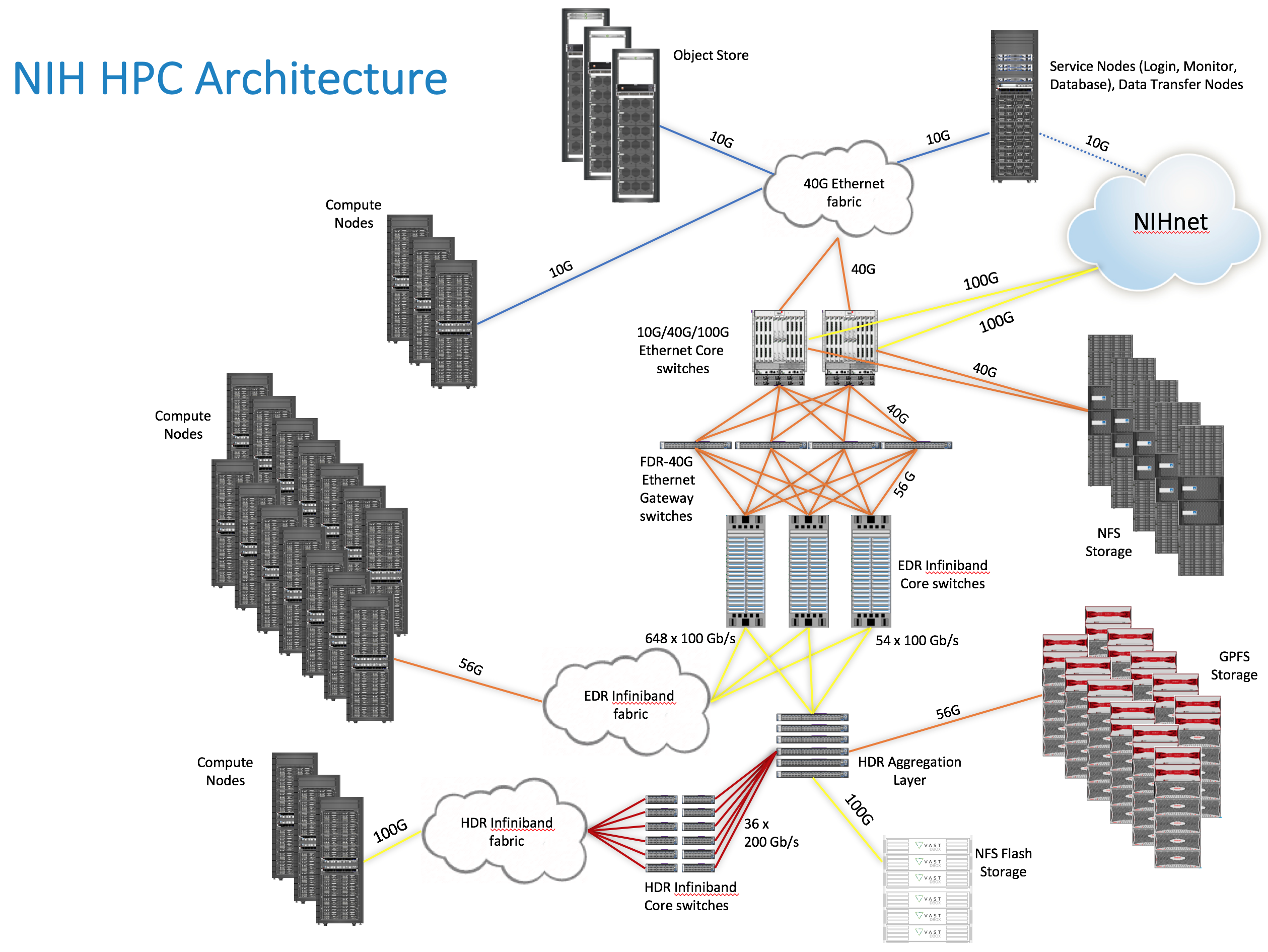

Biowulf networking:

- Phase 2-3-4 networking: Nodes connect via 56 Gb/s FDR InfiniBand to EDR leaf switches (100 Gb/s), which connect to an EDR core fabric at a 1.11:1 blocking ratio.
- Phase 5 networking: Nodes connect via 100 Gb/s HDR100 InfiniBand to HDR leaf switches (200 Gb/s), which connect to HDR spine switches at 2.25:1 blocking. GPU nodes connect at 200 Gb/s HDR to the core (1:1).
- Phase 6 and beyond networking: Nodes connect via 200 Gb/s HDR InfiniBand to HDR leaf switches (200 Gb/s), which connect to HDR spine switches at 2.25:1 blocking.
- The FDR and HDR fabrics interconnect through HDR "Aggregation layer" switches.
- NFS/flash storage connects at 100 Gb/s HDR100; GPFS storage systems connect via FDR (1:1); NFS storage connects via 40 Gb/s Ethernet.
- Service nodes (login, etc.) have redundant 10 Gb/s connections to both NIHnet and the clusternet.
- Ethernet and InfiniBand are bridged by two 200 Gb/s Ethernet-to-HDR InfiniBand gateways.
- Direct connectivity to the NIHnet core is via redundant 100 Gb/s Ethernet.
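A blocking (oversubscription) ratio such as 2.25:1 compares the bandwidth a leaf switch offers its nodes with the bandwidth of its uplinks toward the spine or core. The sketch below computes that ratio and the worst-case share each node gets when every node on a leaf sends off-switch at the same time; traffic that stays within one leaf is not affected. The port counts are hypothetical, chosen only so the results match the ratios quoted above, and are not Biowulf's actual switch configuration. `blocking_ratio`, `worst_case_gbps`, and `fmt` are illustrative helpers.

```python
from fractions import Fraction


def blocking_ratio(down_ports: int, down_gbps: int, up_ports: int, up_gbps: int) -> Fraction:
    """Node-facing bandwidth divided by uplink bandwidth for one leaf switch."""
    if up_ports <= 0 or up_gbps <= 0:
        raise ValueError("a leaf switch needs at least one uplink")
    return Fraction(down_ports * down_gbps, up_ports * up_gbps)


def worst_case_gbps(node_gbps: int, ratio: Fraction) -> Fraction:
    """Per-node off-switch bandwidth if all nodes on the leaf transmit at once."""
    return Fraction(node_gbps) / ratio


def fmt(ratio: Fraction) -> str:
    return f"{float(ratio):.2f}:1"


# Hypothetical port splits (NOT the real Biowulf layout).
hdr100_leaf = blocking_ratio(down_ports=36, down_gbps=100, up_ports=8, up_gbps=200)
edr_leaf = blocking_ratio(down_ports=20, down_gbps=100, up_ports=18, up_gbps=100)
gpu_leaf = blocking_ratio(down_ports=20, down_gbps=200, up_ports=20, up_gbps=200)

print(fmt(hdr100_leaf))  # 2.25:1
print(fmt(edr_leaf))     # 1.11:1
print(fmt(gpu_leaf))     # 1.00:1
print(f"{float(worst_case_gbps(100, hdr100_leaf)):.1f} Gb/s")  # 44.4 Gb/s
```

Using `Fraction` keeps the ratios exact (9/4 and 10/9) until they are formatted for display.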

Ten high-performance storage systems provide over 35 petabytes of storage for the Biowulf cluster.

| storage system | configuration | filesystem | network connectivity | usable storage (TB) |
|---|---|---|---|---|
| NetApp Cluster | 2 x FAS9000 controllers; SATA, SSD | NFS | 16 x 10 Gb/s Ethernet | 450 |
| 2 x DDN SFA12KX-40 | 2 controllers; 8 fileservers; NL-SAS; SSD metadata | GPFS | 16 x 56 Gb/s FDR InfiniBand | 21600 |
| DDN SFA18K | 2 controllers; 8 fileservers; NVMe SSD; NL-SAS; SSD metadata | GPFS over RDMA (InfiniBand) | 8 x 56 Gb/s FDR InfiniBand | 7600 |
| VAST | 144 fileservers; NVMe flash | NFS over RDMA (InfiniBand) | 72 x 100 Gb/s HDR100 InfiniBand | 34814 |
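The "network connectivity" column can be read as an aggregate link rate per storage system: link count times per-link rate. The sketch below does that arithmetic for the rows above. It uses nominal signaling rates, so the results are upper bounds and not measured file-system throughput. The division by 8 ignores encoding and protocol overhead. `StorageLinks`, `aggregate_gbps`, and `gbps_to_gigabytes_per_s` are hypothetical names used only for this illustration.

```python
from typing import NamedTuple


class StorageLinks(NamedTuple):
    system: str
    links: int
    gbps_per_link: int  # nominal link rate taken from the table


STORAGE = [
    StorageLinks("NetApp Cluster", 16, 10),
    StorageLinks("2 x DDN SFA12KX-40", 16, 56),
    StorageLinks("DDN SFA18K", 8, 56),
    StorageLinks("VAST", 72, 100),
]


def aggregate_gbps(s: StorageLinks) -> int:
    """Sum of nominal link rates in Gb/s (an upper bound, not measured throughput)."""
    return s.links * s.gbps_per_link


def gbps_to_gigabytes_per_s(gbps: float) -> float:
    """Convert bits to bytes (8 bits per byte); ignores encoding/protocol overhead."""
    return gbps / 8


for s in STORAGE:
    total = aggregate_gbps(s)
    print(f"{s.system}: {total} Gb/s (~{gbps_to_gigabytes_per_s(total):.0f} GB/s)")
# NetApp Cluster: 160 Gb/s (~20 GB/s)
# 2 x DDN SFA12KX-40: 896 Gb/s (~112 GB/s)
# DDN SFA18K: 448 Gb/s (~56 GB/s)
# VAST: 7200 Gb/s (~900 GB/s)
```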