EMAN2 is the successor to EMAN1. It is a broadly based greyscale scientific image processing suite with a primary focus on processing data from transmission electron microscopes. EMAN's original purpose was performing single particle reconstructions (3-D volumetric models from 2-D cryo-EM images) at the highest possible resolution, but the suite now also offers support for single particle cryo-ET, and tools useful in many other subdisciplines such as helical reconstruction, 2-D crystallography and whole-cell tomography. Image processing in a suite like EMAN differs from consumer image processing packages like Photoshop in that pixels in images are represented as floating-point numbers rather than small (8-16 bit) integers. In addition, image compression is avoided entirely, and there is a focus on quantitative analysis rather than qualitative image display.

This application requires a graphical connection; please use a Graphical Session on HPC OnDemand.

EMAN2 can use GPUs to accelerate certain tasks. To use one, request a GPU when you allocate the interactive session:

```
[user@biowulf]$ sinteractive --gres=gpu:p100:1
...
...
[user@node]$ module load EMAN2
```
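Before starting GPU-accelerated EMAN2 tasks, it can help to confirm that a GPU was actually allocated. The sketch below assumes that Slurm exports `CUDA_VISIBLE_DEVICES` as a comma-separated list of device IDs (e.g. `0` or `0,1`) for jobs that requested `--gres=gpu`; `count_gpus` is a hypothetical helper name, not part of EMAN2.

```bash
#!/usr/bin/env bash
# Count the GPUs visible to this job.
# Assumption: Slurm sets CUDA_VISIBLE_DEVICES to a comma-separated list
# of device IDs when GPUs are requested with --gres=gpu.
count_gpus() {
    local devices="${1-}"
    if [ -z "$devices" ]; then
        echo 0
        return
    fi
    # Split the list on commas and count the fields.
    local IFS=','
    local -a ids
    read -r -a ids <<< "$devices"
    echo "${#ids[@]}"
}

# Example: warn before running GPU-accelerated EMAN2 tasks without a GPU.
n=$(count_gpus "${CUDA_VISIBLE_DEVICES-}")
if [ "$n" -eq 0 ]; then
    echo "No GPU allocated; request one with --gres=gpu:<type>:1" >&2
else
    echo "GPUs allocated: $n"
fi
```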

Allocate an interactive session and run the program. Sample session:

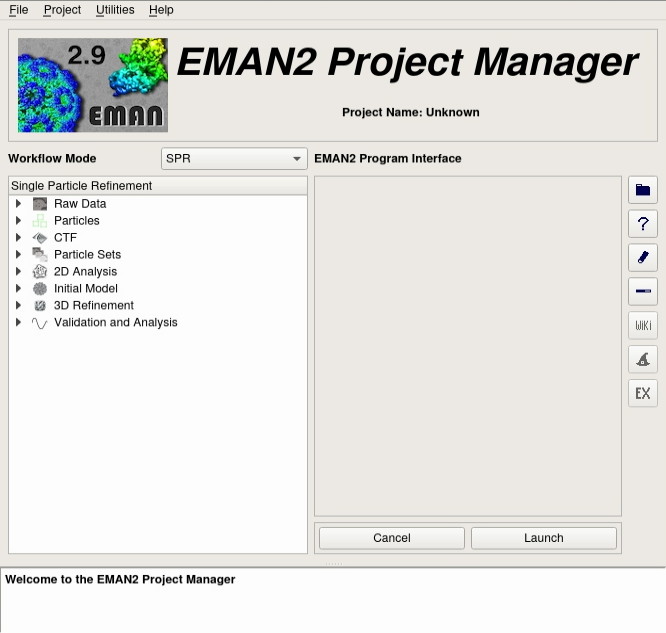

```
[user@biowulf]$ sinteractive
salloc.exe: Pending job allocation 46116226
salloc.exe: job 46116226 queued and waiting for resources
salloc.exe: job 46116226 has been allocated resources
salloc.exe: Granted job allocation 46116226
salloc.exe: Waiting for resource configuration
salloc.exe: Nodes cn3144 are ready for job

[node]$ module load EMAN2
[node]$ e2projectmanager.py
```

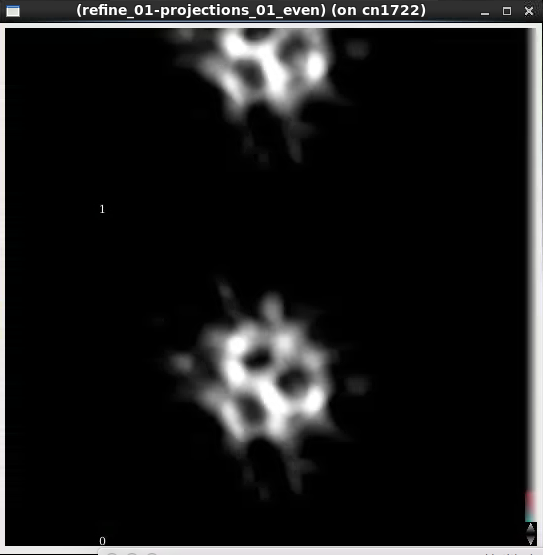

```
[node]$ e2display.py my_image.hdf
```

```
[node]$ exit
salloc.exe: Relinquishing job allocation 46116226
[user@biowulf ~]$
```

Create a batch input file (e.g. EMAN2.sh). For example:

```bash
#!/bin/bash

# set the environment properly
module load EMAN2

# always a good practice: keep temporary files on local scratch
export TMPDIR=/lscratch/${SLURM_JOB_ID}

# Run refinement. Replace the input, output (--path), and reference (--model)
# files, and adjust the options as needed. This command runs one thread per
# allocated CPU ($SLURM_CPUS_PER_TASK, default 1) and stores temporary files
# in /lscratch/$SLURM_JOB_ID.
e2refine.py \
    --parallel=thread:${SLURM_CPUS_PER_TASK:=1}:/lscratch/${SLURM_JOB_ID} \
    --input=bdb:sets#set2-allgood_phase_flipped-hp \
    --mass=1200.0 \
    --apix=2.9 \
    --automask3d=0.7,24,9,9,24 \
    --iter=1 \
    --sym=c1 \
    --model=bdb:refine_02#threed_filt_05 \
    --path=refine_sge \
    --orientgen=eman:delta=3:inc_mirror=0 \
    --projector=standard \
    --simcmp=frc:snrweight=1:zeromask=1 \
    --simalign=rotate_translate_flip \
    --simaligncmp=ccc \
    --simralign=refine \
    --simraligncmp=frc:snrweight=1 \
    --twostage=2 \
    --classcmp=frc:snrweight=1:zeromask=1 \
    --classalign=rotate_translate_flip \
    --classaligncmp=ccc \
    --classralign=refine \
    --classraligncmp=frc:snrweight=1 \
    --classiter=1 \
    --classkeep=1.5 \
    --classnormproc=normalize.edgemean \
    --classaverager=ctf.auto \
    --sep=5 \
    --m3diter=2 \
    --m3dkeep=0.9 \
    --recon=fourier \
    --m3dpreprocess=normalize.edgemean \
    --m3dpostprocess=filter.lowpass.gauss:cutoff_freq=.1 \
    --pad=256 \
    --lowmem \
    --classkeepsig \
    --classrefsf \
    --m3dsetsf -v 2

e2bdb.py -cF
```
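The `${SLURM_CPUS_PER_TASK:=1}` expansion in the `--parallel` option falls back to a single thread when the variable is unset or empty; Slurm sets `SLURM_CPUS_PER_TASK` only when `--cpus-per-task` is requested. The sketch below reproduces how that value is assembled. `thread_spec` is a hypothetical helper used only for illustration.

```bash
#!/usr/bin/env bash
# Build the value passed to e2refine.py --parallel in the script above.
# ${cpus:=1} substitutes 1 when cpus is unset or empty, mirroring
# ${SLURM_CPUS_PER_TASK:=1}. The ":=" form also assigns the default,
# so later uses of the variable see the same value.
thread_spec() {
    local cpus="${1-}" jobid="$2"
    echo "thread:${cpus:=1}:/lscratch/${jobid}"
}

thread_spec "" 46116226   # → thread:1:/lscratch/46116226
thread_spec 32 46116226   # → thread:32:/lscratch/46116226
```

Because of this fallback, submitting without `--cpus-per-task` silently runs the refinement on one thread rather than failing.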

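Submit this job using the Slurm sbatch command. Because the script writes temporary files to /lscratch/$SLURM_JOB_ID, allocate local scratch with --gres=lscratch:#:

```
sbatch --gres=lscratch:# [--cpus-per-task=#] [--mem=#] EMAN2.sh
```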
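If local scratch was not allocated, the refinement fails only once EMAN2 tries to write its temporary files. A minimal guard, assuming that /lscratch/$SLURM_JOB_ID exists only for jobs submitted with --gres=lscratch, can stop the job immediately instead. `require_dir` is a hypothetical helper name.

```bash
#!/usr/bin/env bash
# Fail early if a scratch directory is missing or not writable.
# Assumption: /lscratch/$SLURM_JOB_ID is created only when the job
# was submitted with --gres=lscratch:N.
require_dir() {
    local dir="$1"
    if [ ! -d "$dir" ] || [ ! -w "$dir" ]; then
        echo "ERROR: scratch directory '$dir' is missing or not writable;" \
             "resubmit with --gres=lscratch:N" >&2
        return 1
    fi
}

# In EMAN2.sh, right after exporting TMPDIR:
# require_dir "$TMPDIR" || exit 1
```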

EMAN2 can also run in parallel using MPI instead of multithreading. MPI is inherently less efficient than multithreading, but because it can span multiple nodes, it can improve performance for very large datasets (for example, more than 500K images or particles).

Here is an example of an MPI job (e.g. EMAN2.sh):

```bash
#!/bin/bash

module load EMAN2

# always a good practice: keep temporary files on local scratch
export TMPDIR=/lscratch/${SLURM_JOB_ID}

# Run the refinement with one MPI rank per Slurm task
e2refine_easy.py --input=starting.lst \
    --model=starting_models/model.hdf \
    --targetres=8.0 --speed=5 --sym=c1 \
    --tophat=local --mass=500.0 --apix=0.86 \
    --classkeep=0.5 --classautomask --prethreshold --m3dkeep=0.7 \
    --parallel=mpi:${SLURM_NTASKS:=1}:/lscratch/${SLURM_JOB_ID} \
    --threads ${SLURM_CPUS_PER_TASK:=1} \
    --automaskexpand=-1 --ampcorrect=auto

e2bdb.py -cF
```

Then submit, using the proper partition and allocating matching resources:

```
$ sbatch --partition=multinode --cpus-per-task=1 --ntasks=512 --gres=lscratch:100 --mem-per-cpu=4g --time=1-00:00:00 EMAN2.sh
```
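With `--mem-per-cpu`, the total memory request grows with the number of CPUs: tasks × CPUs per task × memory per CPU. For the submission above that is 512 × 1 × 4 GB = 2048 GB in total, spread across the allocated nodes. The arithmetic as a small sketch (`total_mem_gb` is a hypothetical helper):

```bash
#!/usr/bin/env bash
# Total memory (GB) requested by an sbatch line that uses --mem-per-cpu:
# ntasks x cpus-per-task x mem-per-cpu.
total_mem_gb() {
    local ntasks="$1" cpus_per_task="$2" mem_per_cpu_gb="$3"
    echo $(( ntasks * cpus_per_task * mem_per_cpu_gb ))
}

total_mem_gb 512 1 4   # → 2048
```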

MPI parallelization in EMAN2 is limited to no more than 1024 MPI tasks.
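To avoid queueing a job that EMAN2 cannot use, the MPI batch script could check the task count before calling `e2refine_easy.py`. This is a sketch only; `check_mpi_tasks` and `MAX_EMAN2_MPI_TASKS` are hypothetical names, and 1024 is the limit stated above.

```bash
#!/usr/bin/env bash
# Refuse to launch when more MPI tasks were requested than EMAN2 supports.
# MAX_EMAN2_MPI_TASKS is a hypothetical name; 1024 is the documented limit.
MAX_EMAN2_MPI_TASKS=1024

check_mpi_tasks() {
    # Treat an unset or empty task count as 1, like ${SLURM_NTASKS:=1}.
    local ntasks="${1:-1}"
    if [ "$ntasks" -gt "$MAX_EMAN2_MPI_TASKS" ]; then
        echo "ERROR: $ntasks MPI tasks requested;" \
             "EMAN2 supports at most $MAX_EMAN2_MPI_TASKS" >&2
        return 1
    fi
}

# In the MPI batch script, before e2refine_easy.py:
# check_mpi_tasks "${SLURM_NTASKS-}" || exit 1
```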