| 1999 | NIH Biowulf Cluster started with 40 "boxes on shelves". CHARMm and Blast running on cluster. 14 active users |

| 2000 | 1st scientific paper citing Biowulf. First batch of 16 Myrinet nodes added to cluster. Swarm developed in-house to submit large numbers of independent jobs to the cluster. |

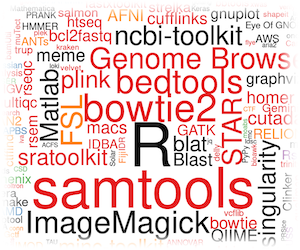

| 2001 | Blat, GAMESS, Gauss, Amber, R available on cluster. PBS Pro batch system. |

| 2002 | New login node. 80 nodes added to cluster. MySQL databases available to users. NAMD installed. |

| 2003 | New Biowulf website. Added 198 'p2800' nodes, including 24 nodes with 4 GB of memory. Default user disk quota increased from 32 to 48 GB. Myrinet upgrade. Smallest memory node on Biowulf has 512 MB RAM. First benchmarking of 64-bit Opteron processor. 66 nodes (2 x 2.8 GHz Intel Xeon, 2 GB RAM) added to cluster. |

| 2004 | Added 132 dual-processor 2.8 GHz Intel Xeon nodes with 2-4 GB memory, plus 32 AMD Opterons with Myrinet and 42 AMD Opterons. Cluster reaches 1000+ nodes. Swarm bundling option for 1000s of short jobs. Adios Telnet! |

| 2005 | Added 64 dual-processor AMD Opterons, 2.2 GHz with 4 GB RAM. Nodes upgraded to RHEL 3.1 (Linux 2.6 kernel). New login node, dual-processor 3.2 GHz Xeon, 4 GB memory. Quad-core 2.2 GHz 64-bit AMD Opteron for interactive jobs. |

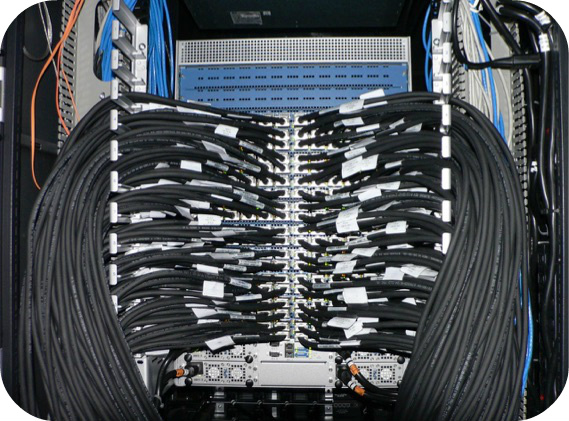

| 2006 | Added 324 dual-processor, 2.8 GHz AMD Opterons with 4 GB memory. Added 40 dual-core, 2.6 GHz AMD Opterons with 8 GB memory. Added 64 InfiniBand-connected nodes. Firebolt, an SGI Altix 350 with 32 Itanium-2 processors and 96 GB of memory, is installed as a 'fat node' on Biowulf. Biowulf total: 3700 CPUs. |

| 2007 | Another 48 InfiniBand-connected nodes for a total of 112 IB nodes. Per-user limit raised from 16 to 24 IB nodes. 280 2.8 GHz Opterons, 4-8 GB RAM, added to cluster. |

| 2008 | Helix transitions from SGI Origin 2400 to a Sun Opteron. All nodes go to CentOS 5. |

| 2009 | "The NIH Biowulf Cluster: 10 years of Scientific Supercomputing" symposium held at NIH, Bethesda. Added 224 InfiniBand-connected nodes, 8 processor, Intel EMT-64 2.8 GHz. Added 16 "very-large memory nodes", 72 GB memory each, plus one 512 GB memory node. Storage system added, increasing capacity by 450 TB. |

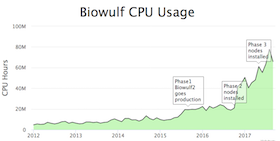

| 2010 | Myrinet nodes decommissioned. All Biowulf nodes now 64-bit. Added 336 Intel quad-core Nehalem nodes, 2.67 GHz, 24 GB memory, plus 16 nodes with 72 GB RAM. 16 pilot GPU nodes added to cluster. Biowulf has a total of 9000 cores. |

| 2011 | Helix transitioned to 64-processor, 1 TB memory hardware. Added 328 compute nodes, 2 x Intel 2.8 GHz X5660 processors, 24 GB memory. |

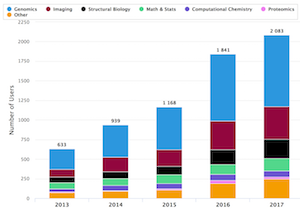

| 2013 | Annual Biowulf account renewals implemented. Environment modules implemented for scientific applications. |

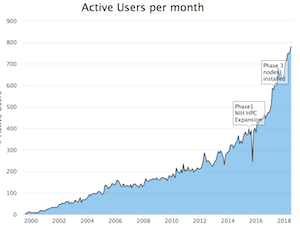

| 2014 | NCI and NIMH fund nodes on the cluster. Major network upgrades increasing bandwidth between HPC systems and NIH core. 24 NIH ICs, 250 PIs, 620 users. |

| 2015 | Storage reaches 3 PB. NCI and NIDDK fund additional nodes. 1000th scientific paper published citing Biowulf. Phase 1 HPC upgrade installed. Transition to Slurm batch system. Biowulf2 goes production in July. Webservers migrated to new hardware. Dedicated data-transfer nodes installed. 400+ scientific applications installed and updated. |

| 2016 | New 'HPC account' process: all users get access to both Helix and Biowulf. Monthly Walk-in Consults started. 30,000 cores added. 3 PB disk storage added. All new nodes on FDR InfiniBand. Singularity containers on Biowulf. |

| 2017 | Phase 3 goes online, with: 30,000 additional cores; 72 2 x K80 GPU nodes; 1.5 TB memory nodes; 3 TB memory nodes. All new nodes on EDR InfiniBand. HPC staff develops new classes on Object storage, Singularity and Snakemake. NIH Director's Award for HPC Expansion Team. |

| 2018 | Biowulf Seminar Series. 48 P100 Nvidia GPU nodes added to cluster, each with 4 GPUs. 2000th paper published based on Biowulf usage. Biowulf cluster migrated to CentOS 7. Four V100 Nvidia GPU nodes added to cluster, each with 4 GPUs. 'Intro to Biowulf' becomes an online class. Helix becomes the Interactive Data Transfer system, and moves to newer, faster hardware. |

| 2019 | Biowulf 20th anniversary Seminar Series. Additional SSDs added, allowing up to 2.4 TB of local scratch disk allocations. 2500th paper published based on Biowulf usage. New classes developed and taught: |

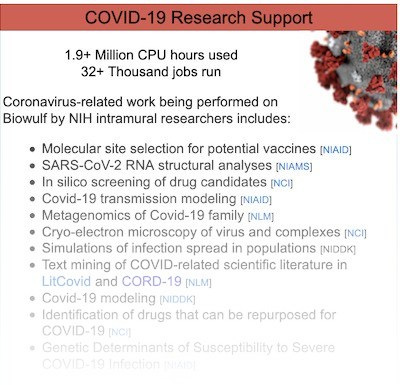

| 2020 | 21000+ CPUs added to Biowulf, including: 56 GPU nodes with Nvidia V100 GPUs, 4 per node, NVLink; Skylake processors, 384 GB memory and 3.2/1.6 TB SSDs. 3000th paper published based on Biowulf usage. Biowulf supports COVID-19 research. Monthly Zoom-In Consults started. |

| 2021 | 4000th paper published based on Biowulf usage. Interactive Krona-based visualizations of data directories made available to users. New visualization partition with GPU-accelerated graphics. |

| 2022 | 9000 additional Epyc CPUs added to cluster. 88 A100 GPUs (22 GPU nodes) added to Biowulf. |

| 2023 | Biowulf migrated to RHEL8/Rocky8. 5000th paper published based on Biowulf usage. |

| 2024 | Biowulf 25th Anniversary Seminar Series. 6000th paper published based on Biowulf usage. Additional nodes added, including: |

| 2025 | Began hosting Open OnDemand. Hosted the 2025 Biowulf Seminar Series. Enabled the processing of PII/PHI data using a security-compliant partition. |
