Tensorboard on Biowulf

The computations you'll use TensorFlow for, such as training a massive deep neural network, can be complex and confusing. To make TensorFlow programs easier to understand, debug, and optimize, the TensorFlow developers included a suite of visualization tools called TensorBoard. You can use TensorBoard to visualize your TensorFlow graph, plot quantitative metrics about the execution of your graph, and show additional data like images that pass through it.

Documentation

Starting a Tensorboard Instance

This can be done by submitting either a batch or an interactive job. This guide will demonstrate the latter. Note that the script that runs the deep learning training and testing must write TensorBoard summaries. For details, please see the TensorBoard main site.
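As a rough illustration of what "writing summaries" means, here is a minimal sketch. It assumes TensorFlow 2.x, where `tf.summary.create_file_writer` and `tf.summary.scalar` are available; the MNIST example script used later in this guide is a TensorFlow 1.x script and is structured differently. The function names, the run name, and the logged values are all hypothetical placeholders, not part of any Biowulf or TensorFlow example.

```python
import os


def run_log_dir(base_dir: str, run_name: str) -> str:
    """Return the per-run directory that summaries are written to (hypothetical helper)."""
    return os.path.join(base_dir, run_name)


def write_demo_summaries(log_dir: str, steps: int = 100) -> None:
    """Write a made-up scalar curve that TensorBoard can plot."""
    # Assumption: TensorFlow 2.x is installed. It is imported here so that
    # run_log_dir() stays usable on machines without TensorFlow.
    import tensorflow as tf

    writer = tf.summary.create_file_writer(log_dir)
    with writer.as_default():
        for step in range(steps):
            # Placeholder metric; a real script would log its measured accuracy.
            fake_accuracy = 1.0 - 1.0 / (step + 1)
            tf.summary.scalar("accuracy", fake_accuracy, step=step)
    writer.flush()
```

Calling `write_demo_summaries(run_log_dir("./logs", "demo_run"))` should leave event files under `./logs/demo_run`, which is the kind of directory that the `--logdir` option of `tensorboard` reads.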

Allocate an interactive session, then start a TensorFlow MNIST training run and a TensorBoard instance as shown below.

[user@biowulf]$ sinteractive --tunnel -c 8 --mem 30g --gres=gpu:k80:1,lscratch:20

salloc.exe: Pending job allocation 26710013

salloc.exe: job 26710013 queued and waiting for resources

salloc.exe: job 26710013 has been allocated resources

salloc.exe: Granted job allocation 26710013

salloc.exe: Waiting for resource configuration

salloc.exe: Nodes cn3094 are ready for job

Created 1 generic SSH tunnel(s) from this compute node to

biowulf for your use at port numbers defined

in the $PORTn ($PORT1, ...) environment variables.

Please create a SSH tunnel from your workstation to these ports on biowulf.

On Linux/MacOS, open a terminal and run:

ssh -L 45000:localhost:45000 biowulf.nih.gov

For Windows instructions, see https://hpc.nih.gov/docs/tunneling

[user@cn3144]$ echo $PORT1

45000

[user@cn3144]$ cd /lscratch/$SLURM_JOB_ID

[user@cn3144]$ export TMPDIR=/lscratch/$SLURM_JOB_ID

[user@cn3144]$ module load python/3.10

[user@cn3144]$ # Clone the tensorflow github repo

[user@cn3144]$ git clone https://github.com/tensorflow/tensorflow.git

Cloning into 'tensorflow'...

remote: Enumerating objects: 16, done.

remote: Counting objects: 100% (16/16), done.

remote: Compressing objects: 100% (16/16), done.

remote: Total 531395 (delta 2), reused 11 (delta 0), pack-reused 531379

Receiving objects: 100% (531395/531395), 308.15 MiB | 36.91 MiB/s, done.

Resolving deltas: 100% (427138/427138), done.

[user@cn3144]$ # Let's start training the mnist model with tensorboard summaries specifying

# where to write the log files. The script (mnist_with_summaries.py) is a

# modified mnist script (original: mnist.py) for tensorboard use.

[user@cn3144]$ python tensorflow/tensorflow/examples/tutorials/mnist/mnist_with_summaries.py --log_dir=./logs

/usr/local/Anaconda/envs/py3.6/lib/python3.6/site-packages/h5py/__init__.py:36: FutureWarning: Conversion of the second argument of issubdtype from `float` to `np.floating` is deprecated. In future, it will be treated as `np.float64 == np.dtype(float).type`.

from ._conv import register_converters as _register_converters

WARNING:tensorflow:From tensorflow/tensorflow/examples/tutorials/mnist/mnist_with_summaries.py:41: read_data_sets (from tensorflow.contrib.learn.python.learn.datasets.mnist) is deprecated and will be removed in a future version.

Instructions for updating:

Please use alternatives such as official/mnist/dataset.py from tensorflow/models.

WARNING:tensorflow:From /usr/local/Anaconda/envs/py3.6/lib/python3.6/site-packages/tensorflow/contrib/learn/python/learn/datasets/mnist.py:260: maybe_download (from tensorflow.contrib.learn.python.learn.datasets.base) is deprecated and will be removed in a future version.

Instructions for updating:

Please write your own downloading logic.

WARNING:tensorflow:From /usr/local/Anaconda/envs/py3.6/lib/python3.6/site-packages/tensorflow/contrib/learn/python/learn/datasets/mnist.py:262: extract_images (from tensorflow.contrib.learn.python.learn.datasets.mnist) is deprecated and will be removed in a future version.

Instructions for updating:

Please use tf.data to implement this functionality.

Extracting /tmp/tensorflow/mnist/input_data/train-images-idx3-ubyte.gz

WARNING:tensorflow:From /usr/local/Anaconda/envs/py3.6/lib/python3.6/site-packages/tensorflow/contrib/learn/python/learn/datasets/mnist.py:267: extract_labels (from tensorflow.contrib.learn.python.learn.datasets.mnist) is deprecated and will be removed in a future version.

Instructions for updating:

Please use tf.data to implement this functionality.

Extracting /tmp/tensorflow/mnist/input_data/train-labels-idx1-ubyte.gz

Extracting /tmp/tensorflow/mnist/input_data/t10k-images-idx3-ubyte.gz

Extracting /tmp/tensorflow/mnist/input_data/t10k-labels-idx1-ubyte.gz

WARNING:tensorflow:From /usr/local/Anaconda/envs/py3.6/lib/python3.6/site-packages/tensorflow/contrib/learn/python/learn/datasets/mnist.py:290: DataSet.__init__ (from tensorflow.contrib.learn.python.learn.datasets.mnist) is deprecated and will be removed in a future version.

Instructions for updating:

Please use alternatives such as official/mnist/dataset.py from tensorflow/models.

2019-02-26 09:49:58.979015: I tensorflow/core/platform/cpu_feature_guard.cc:141] Your CPU supports instructions that this TensorFlow binary was not compiled to use: SSE4.1 SSE4.2 AVX

2019-02-26 09:49:59.695498: I tensorflow/core/common_runtime/gpu/gpu_device.cc:1432] Found device 0 with properties:

name: Tesla K20Xm major: 3 minor: 5 memoryClockRate(GHz): 0.732

pciBusID: 0000:27:00.0

totalMemory: 5.94GiB freeMemory: 5.87GiB

2019-02-26 09:49:59.695600: I tensorflow/core/common_runtime/gpu/gpu_device.cc:1511] Adding visible gpu devices: 0

2019-02-26 09:50:00.178254: I tensorflow/core/common_runtime/gpu/gpu_device.cc:982] Device interconnect StreamExecutor with strength 1 edge matrix:

2019-02-26 09:50:00.178322: I tensorflow/core/common_runtime/gpu/gpu_device.cc:988] 0

2019-02-26 09:50:00.178348: I tensorflow/core/common_runtime/gpu/gpu_device.cc:1001] 0: N

2019-02-26 09:50:00.178676: I tensorflow/core/common_runtime/gpu/gpu_device.cc:1115] Created TensorFlow device (/job:localhost/replica:0/task:0/device:GPU:0 with 5662 MB memory) -> physical GPU (device: 0, name: Tesla K20Xm, pci bus id: 0000:27:00.0, compute capability: 3.5)

Accuracy at step 0: 0.0926

Accuracy at step 10: 0.695

Accuracy at step 20: 0.8179

Accuracy at step 30: 0.8716

Accuracy at step 40: 0.8849

Accuracy at step 50: 0.8971

[...]

Accuracy at step 940: 0.9654

Accuracy at step 950: 0.9637

Accuracy at step 960: 0.9685

Accuracy at step 970: 0.9684

Accuracy at step 980: 0.968

Accuracy at step 990: 0.9689

Adding run metadata for 999

# The $PORT1 environment variable is set by the --tunnel option of sinteractive

[user@cn3144]$ tensorboard --logdir=./logs --port=$PORT1 --host=localhost

/usr/local/Anaconda/envs/py3.6/lib/python3.6/site-packages/h5py/__init__.py:36: FutureWarning: Conversion of the second argument of issubdtype from `float` to `np.floating` is deprecated. In future, it will be treated as `np.float64 == np.dtype(float).type`.

from ._conv import register_converters as _register_converters

TensorBoard 1.10.0 at http://cn0619:45000 (Press CTRL+C to quit)

The port must be unique to avoid clashing with other users. Keep this session open for as long as you are using TensorBoard.
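If you prefer to start TensorBoard from a Python wrapper rather than typing the command, the launch step could be sketched as follows. The flags (`--logdir`, `--port`, `--host`) and the `PORT1` variable are the ones shown in the session above; the function names `tensorboard_args` and `launch_tensorboard` are hypothetical, and the sketch assumes the `tensorboard` executable is on your `PATH`.

```python
import os
import subprocess
from typing import List, Mapping


def tensorboard_args(logdir: str, env: Mapping[str, str] = os.environ) -> List[str]:
    """Build the tensorboard command line from the PORT1 variable."""
    port = env.get("PORT1")
    if port is None:
        raise RuntimeError("PORT1 is not set; was the session started with --tunnel?")
    # int() rejects values that are not a plain port number.
    return ["tensorboard", f"--logdir={logdir}", f"--port={int(port)}", "--host=localhost"]


def launch_tensorboard(logdir: str) -> subprocess.Popen:
    """Start TensorBoard as a child process; it runs until terminated."""
    return subprocess.Popen(tensorboard_args(logdir))
```

Building the argument list separately from launching makes it easy to print and inspect the command before anything is started.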

Note: In the unlikely event that this port is already in use on the compute node, please select another random port.
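One way to check a port before starting TensorBoard is to try binding to it. The sketch below uses only the Python standard library; the function names and the 40000 to 50000 range are assumptions for illustration, not a Biowulf convention. Note that the check is only a snapshot: another process could still take the port between the check and the TensorBoard launch.

```python
import random
import socket


def port_is_free(port: int, host: str = "localhost") -> bool:
    """Return True if nothing is bound to host:port right now."""
    with socket.socket(socket.AF_INET, socket.SOCK_STREAM) as sock:
        try:
            sock.bind((host, port))
        except OSError:
            # Typically "address already in use".
            return False
    return True


def pick_free_port(low: int = 40000, high: int = 50000, attempts: int = 50) -> int:
    """Try random ports in [low, high) and return the first free one."""
    for _ in range(attempts):
        candidate = random.randrange(low, high)
        if port_is_free(candidate):
            return candidate
    raise RuntimeError("no free port found in the requested range")
```

A port chosen this way on the compute node would also need a matching tunnel, so the port provided by `--tunnel` in `$PORT1` remains the simpler choice when it is available.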

Connecting to Your Tensorboard Instance

Connecting to your TensorBoard instance on a compute node with your local browser requires a tunnel. How to set up the tunnel depends on your local workstation. If the port selected above is already in use on biowulf, you will get an error. In that case, select another random port, restart TensorBoard on that port, and try tunneling again.

From your local machine, do the following:

[user@workstation]$ ssh -L 45000:localhost:45000 biowulf.nih.gov

After entering your password,the tunnel will have been established. The link

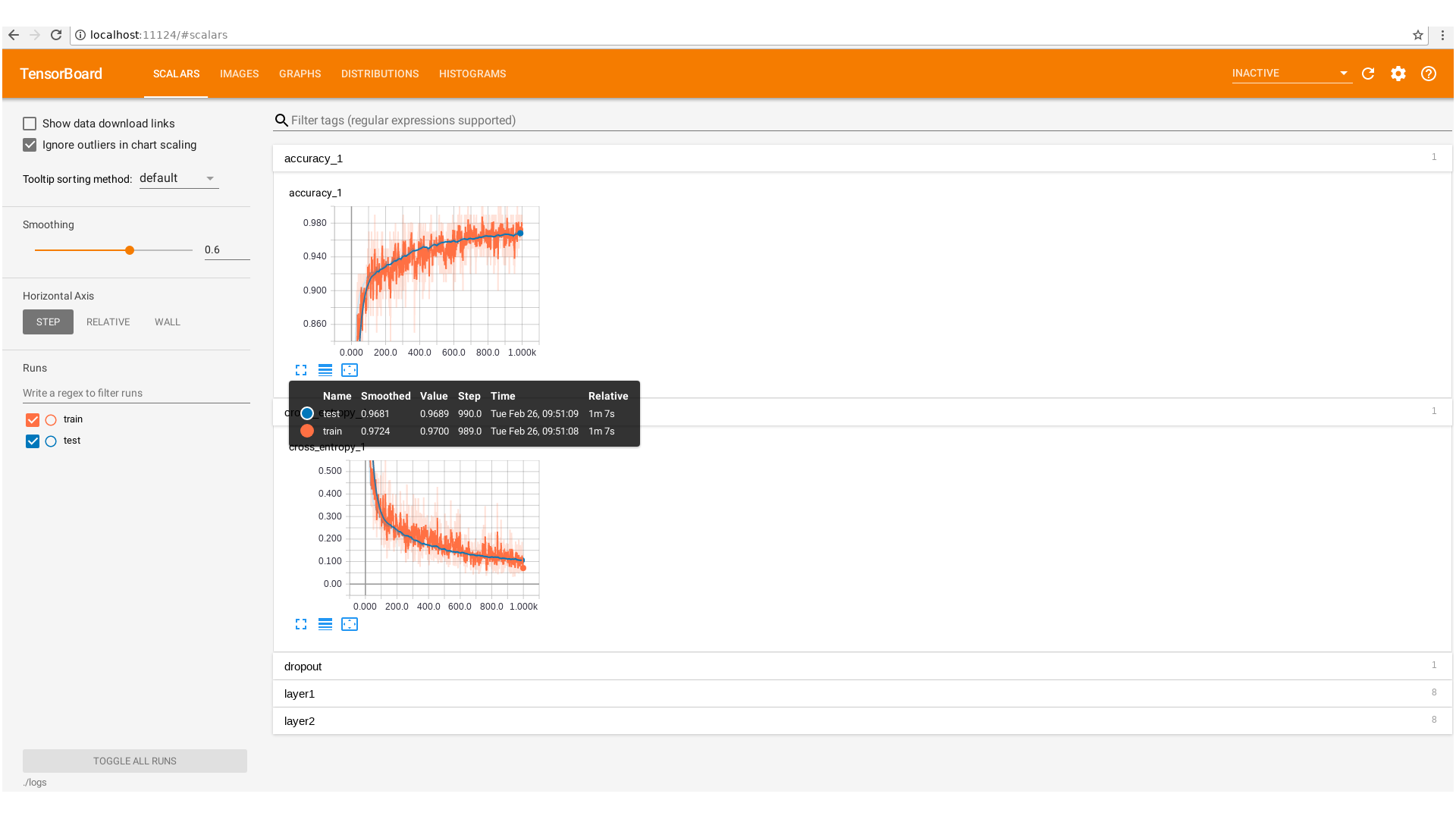

localhost:45000 will now work if you paste it into your web browser.

For setting up a tunnel from your desktop to a compute node with PuTTY, please see https://hpc.nih.gov/docs/tunneling/

Open a web browser and use localhost:45000 for the address (modify port accordingly).
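If the page does not load, it can help to confirm that something is actually listening on the local end of the tunnel. The following is a minimal sketch using only the Python standard library, intended to be run on your workstation. The function names are hypothetical; the `ssh -L` command format and the `biowulf.nih.gov` host are taken from the instructions above.

```python
import socket


def tunnel_command(port: int, login_host: str = "biowulf.nih.gov") -> str:
    """Return the ssh command that forwards a local port to the same port on the login host."""
    return f"ssh -L {port}:localhost:{port} {login_host}"


def tunnel_is_up(port: int, host: str = "localhost", timeout: float = 2.0) -> bool:
    """Return True if a TCP connection to host:port succeeds."""
    try:
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:
        # Connection refused, timed out, or host not resolvable.
        return False
```

A successful connection only shows that the local port accepts connections. It does not prove that TensorBoard is the service answering at the far end, so the browser remains the final check.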